A Robots.txt file lets you give search engine robots instructions about crawling your web pages. Its basic functionality is supported by all major search engines. The file looks very simple, but a mistake in it can seriously harm your website. Make sure that you read and understand this blog before you start using Robots.txt:

A. What is Robots.txt?: Robots.txt is a plain text file that search engine spiders read before crawling a website. It implements what is known as the 'Robots Exclusion Protocol'. The file follows a strict syntax, so you should take care not to make any errors in it.

B. Where can I place Robots.txt file?: You should always place the Robots.txt file in the root of your domain. For instance, if your domain is www.yourdomain.com, the file must be reachable at https://www.yourdomain.com/robots.txt. The name of the file is case sensitive and must be all lowercase (robots.txt), so Digital Marketing Boy suggests you get that right, otherwise it will not work.
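As a quick illustration of the "root of the domain" rule, here is a minimal Python sketch that works out where a crawler would look for the file, starting from any page URL. The function name robots_txt_url and the sample page URL are made up for this example.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Build the robots.txt location for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, replace the path with /robots.txt,
    # and drop any query string or fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# Hypothetical page URL, used only for illustration.
print(robots_txt_url("https://www.yourdomain.com/Sports/cricket.html?page=2"))
# prints https://www.yourdomain.com/robots.txt
```

Whatever page you start from, the result is always the lowercase robots.txt at the top level of that host.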

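C. What does Robots.txt do?: Robots.txt tells search engines which web page URLs they are allowed to crawl. Note that it controls crawling rather than indexing: a blocked URL can still show up in search results if other pages link to it. Search engines re-fetch the file regularly and cache it in between (Google generally caches it for up to 24 hours), so changes are usually picked up within about a day rather than immediately.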

D. Advantage of Robots.txt: With a Robots.txt file, SEO experts can easily block search engines from crawling problematic pages of your website. For example, all web page URLs containing a query string can be blocked by adding the line Disallow: /*?* to the Robots.txt file.
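Before adding a rule like Disallow: /*?*, it can help to see which of your URLs it would affect. The Python sketch below is only an approximation: it treats a URL as affected when it carries a non-empty query string. The helper name and the sample URLs are assumptions made for illustration.

```python
from urllib.parse import urlsplit


def has_query_string(url):
    """Return True when the URL carries a non-empty query string."""
    return bool(urlsplit(url).query)


# Hypothetical URLs, used only for illustration.
urls = [
    "https://www.yourdomain.com/sports/",
    "https://www.yourdomain.com/sports/?sort=price",
    "https://www.yourdomain.com/index.aspx?y=4",
]

# URLs that a query-string rule such as Disallow: /*?* would block.
affected = [u for u in urls if has_query_string(u)]
for u in affected:
    print(u)
```

Running a check like this over a list of your own URLs is a cheap way to spot pages you did not mean to block.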

E. Disadvantage of Robots.txt: If search engines cannot crawl page X, they cannot pass link value to page Y that is linked from page X. You also lose the value of the backlinks pointing to a web page once you block it with the Robots.txt file.

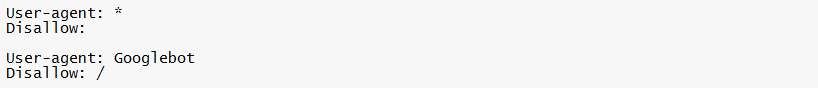

F. What is Syntax of Robots.txt?: A Robots.txt file consists of one or more blocks of directives, and each block starts with a User-agent line. The following are examples of blocks.

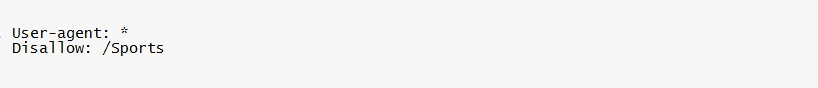

In a Robots.txt file, the directive names (Allow, Disallow, User-agent) are not case sensitive, while their path values are case sensitive. You can use the line User-agent: Googlebot if you want to instruct the Google spider about the crawling of your web pages. The commonly used User-agents are: Googlebot (Google), bingbot (Bing; msnbot is its older crawler), Slurp (Yahoo), AdsBot-Google (Google Ads, formerly AdWords) and more. A block containing User-agent: * and an empty Disallow means that no web pages are blocked for any search engine robot. The example below would block all search engines from crawling the Sports directory on your website and everything in it.

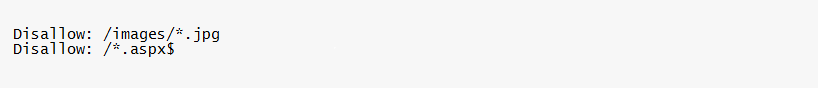
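To see these rules in action, here is a minimal sketch using Python's standard urllib.robotparser module. The Robots.txt content is an assumed reconstruction of the Sports example described above, and the page paths are made up. The directive name is written in upper case on purpose to show that directive names are not case sensitive, while the path value is.

```python
from urllib.robotparser import RobotFileParser

# Assumed robots.txt content matching the description above:
# every robot is blocked from the Sports directory.
ROBOTS_TXT = """\
User-agent: *
DISALLOW: /Sports/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(path):
    """Check whether Googlebot may crawl the given path on the example domain."""
    return parser.can_fetch("Googlebot", "https://www.yourdomain.com" + path)


print(allowed("/Sports/cricket.html"))  # False: inside the blocked directory
print(allowed("/sports/cricket.html"))  # True: path values are case sensitive
print(allowed("/index.html"))           # True: not covered by any rule
```

Keep in mind that this standard-library parser only does simple prefix matching, so it is a learning aid rather than a copy of how any particular search engine behaves.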

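G. Can I use Regular Expressions?: Not full regular expressions, but you can use the two wildcard characters * and $ in a Robots.txt file for specific circumstances. Major search engines such as Google and Bing support them. Consider the following lines: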

The first line contains *, which matches any sequence of characters and here stands for any file name. Thus a file /images/baseball.jpg will be blocked from being crawled. The symbol $ in the second line indicates the end of a web page URL. This means /sports.aspx cannot be crawled but /index.aspx?y=4 could be.
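The behaviour of * and $ can be sketched in a few lines of Python by translating a Robots.txt path pattern into a regular expression. The two rules used here, /images/*.jpg and /*.aspx$, are assumptions chosen to fit the description above, and the function names are made up for this example. This is a simplified model, not the exact matcher of any search engine.

```python
import re


def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regular expression."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # '*' matches any run of characters; everything else is taken literally.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_blocked(pattern, path):
    """Return True when the path matches the pattern from its first character."""
    return pattern_to_regex(pattern).match(path) is not None


# Assumed rules, chosen to fit the description above.
print(is_blocked("/images/*.jpg", "/images/baseball.jpg"))  # True
print(is_blocked("/*.aspx$", "/sports.aspx"))               # True
print(is_blocked("/*.aspx$", "/index.aspx?y=4"))            # False
```

Without the trailing $, the pattern /*.aspx would also match /index.aspx?y=4, because a rule normally matches any URL that starts with the pattern.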

H. How to Validate your Robots.txt?: SEO experts can use various tools to validate a Robots.txt file. Digital Marketing Boy recommends 'Google Search Console', one of the more powerful SEO tools, for Robots.txt validation.

Search engine experts should thoroughly check the Robots.txt file before going live with it. Robots.txt also supports further directives such as Allow, Host (supported by Yandex), Crawl-delay (which Google ignores) and Sitemap.
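As a rough pre-launch check, the sketch below reads Allow, Crawl-delay and Sitemap back out of a Robots.txt file with Python's standard urllib.robotparser module (Python 3.8 or newer is assumed, because site_maps() was added in that version). The file content, paths and sitemap URL are all made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Allow: /Sports/public/
Disallow: /Sports/
Crawl-delay: 10
Sitemap: https://www.yourdomain.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

base = "https://www.yourdomain.com"
public_ok = parser.can_fetch("Googlebot", base + "/Sports/public/scores.html")
private_ok = parser.can_fetch("Googlebot", base + "/Sports/private.html")
delay = parser.crawl_delay("Googlebot")
sitemaps = parser.site_maps()

print(public_ok)   # True: the Allow rule matches first
print(private_ok)  # False: only the Disallow rule matches
print(delay)       # 10
print(sitemaps)    # ['https://www.yourdomain.com/sitemap.xml']
```

This parser applies rules in the order they appear in the file, which is why the Allow line is placed before the Disallow line here. Search engines may resolve such conflicts differently (Google, for example, prefers the most specific rule), so treat this as a sanity check and still validate the file in Google Search Console.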
